Elliptic curves form a basic building block for all kinds of complex cryptographic machinery: digital signature schemes, key exchange mechanisms, zero knowledge proofs, multi-party computation, etc.

While all curves have a similar mathematical structure they can have wildly different properties in terms of security, performance, and supported arithmetic. With the increasing adoption of zero-knowledge cryptography, finding and exploiting such differences becomes increasingly prominent. Thus, the search for new elliptic curves is an active area of research in cryptography. The goal is to find curves that offer higher security, more efficient arithmetic operations, and are widening the scope of cryptographic applications.

This post goes over different methods for constructing elliptic curves, some potential applications, and some practical consideration for application-specific curve development. The reader is assumed to have a basic understanding of elliptic curve cryptography. If not consider checking elliptic curves cheat-sheet first.

Why search for new curves?

As with many things in modern cryptography, it is the rise of blockchains that sparked so many innovations related to elliptic curves as well as zero-knowledge cryptography, which subsequently pushed even more researchers to actively search for new elliptic curves. The applications described here would therefore be closely related to Web3 and ZK domains.

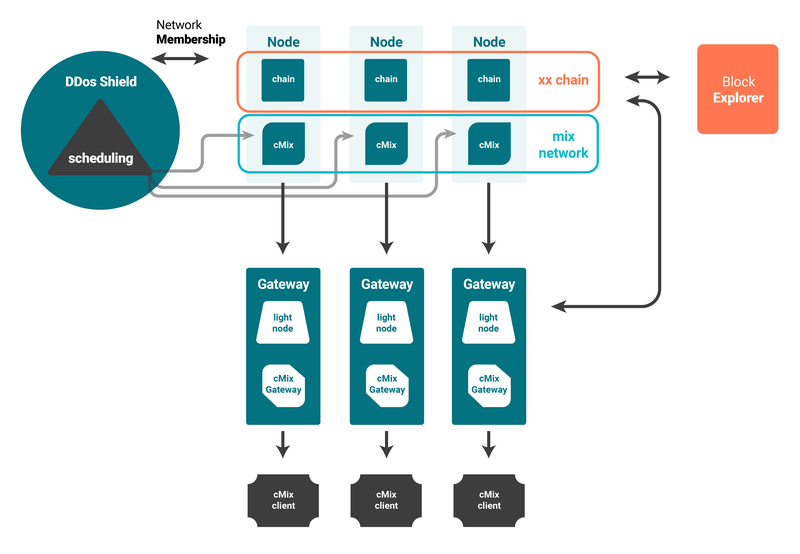

In ZK systems computation is expressed as arithmetic circuits that in turn perform their operations on a finite field Fr. This field corresponds to a scalar field of an elliptic curve whose base field is then used by the verifier in the proof verification algorithm. A verifier can be a person whom you need to prove some statement, but more commonly the verifier would be a smart contract. Blockchains that host these contracts usually support a fairly limited number of elliptic curves. Ethereum for example only has a precompiled contract for BN256. This can become a significant limitation when an in-circuit computation itself contains elliptic curve operations. Think of signature verification; checking that encrypted message has some properties, or even verifying another SNARK aka recursive proofs composition, which we discuss later.

An example to note is related to the StarkNet platform and Cairo language. The core technology powering these two is STARKs (no surprise). What's interesting is that, unlike other proof systems, STARKs only require a small prime field to operate, so researchers at Starkware invented a special STARK curve that has a very small prime field - 64 bytes. As a result, implementing cryptographic schemes over the standard curves (e.g. ECDSA over Secp256k1) would be wasteful. Unsatisfied with the status quo Mikerah from HashCloak resolved to find a new Cairo-friendly curve named Starkjub.

The solution to this kind of problem is quite simple, in fact, it's the oldest trick in the book. One can pick another curve E′ whose base field matches the scalar field of a curve E used by the verifier. This offers a great alternative to simulating foreign fields with available (unfriendly) one, referred to as non-native arithmetic. Commonly, curves found with such intent have a twisted Edwards form. They are defined over a particular form of equation, ax2+y2=1+dx2⋅y2, and are known for their efficient point addition. Many such curves were found in recent years. Some of them are given cute names like JubJub (Ed-on-BLS12-381) and funny pictures to be more memorable.

JubJub (left) and Bandersnatch (right)

Application-constraint arithmetic circuits

For some applications the aforementioned curve substitution is impossible. Think of a cross-chain bridge where a smart contract on the destination chain needs to verify signatures of the source blockchain. Another example is identity-based encryption (IBE) like the one used in the tlock scheme to achieve practical time-lock encryption facilitated by Drand threshold network. Recently I've set to make such encryption verifiable and quickly realized that performing arithmetic on BLS12-381, which Drand operates on, is very inefficient with existing tools. Search for a better alternative brought me into this rabbit hole.

The discovered solution is the opposite of the one we've just discussed. Here a curve must be picked, whose scalar field matches the projective curve's base field. Depending on the proving system, there can be another important requirement: systems that rely on KZG commitment scheme, such as Groth'16, PLONK require pairing-friendly curves to operate. The reason is that KZG proof verification algorithm requires elliptic curve point multiplication which is not allowed in traditional ECC. However, when both points are from pairing-friendly curve G1 and G2 and there exists a bilinear map between their respective groups of points, then it's possible to map these points into a target group GT point, which acts as a product G1×G2→GT.

The known methods for finding pairing-friendly curves whose scalar field embeds other curve's base field are Cocks–Pinch (CP) and Dupont-Enge-Morain (DEM), and we will be taking a closer look at them later in the article. In the previously mentioned time-lock IBE project, the Cocks-Pinch method was used to find a curve that embeds BLS12-381 scalar field, which I named YT6-776 curve aka "Yeti".

When two curves E1 and E2 satisfy r1=#E2(F2) where r is size of the largest prime subgroup (scalar field), then they are referred to as 2-chain. If to keep adding embedding curves satisfying the given condition, the resulting set of curves E1,E2,...,En would be an n-chain.

Recursion substrate for ZKPs

Recursive ZKPs is a short way of saying that one proof attests to the validity of another one, i.e. "proof of a proof". For this, an arithmetic circuit of the outer proof must implement the verification algorithm of an inner proof. If both outer and inner proofs are based on the same proving system we say it's a recursive proof composition, otherwise just a proof composition. When proof verifies a proof just once or a bounded number of times then it's one-layer or N-layer recursion respectively.

The reason why recursion is challenging relates closely to the aforementioned problems. Instead of repeating myself, I will quote an explanation for the "Zexe: Enabling Decentralized Private Computation" paper, which I recommend for those who'd like to go beyond the concepts mentioned in this article.

The way to approach recursive proof composition would vary depending on the desired type. For bounded recursion, the n-chain of curves would be sufficient to compose proofs up to n levels, otherwise the cycle of curves must be used. A pair of curves that satisfy r1=#E2(F2)∧r2=#E1(F1) forms a 2-cycle. During proofs generation, one would have to alternate the instantiation of the proofs with two curves of the cycle so that their fields "match up". Only prime-order curves can form cycles and it's generally much harder to find cycles of pairing-friendly curves. Ideally, both curves in the cycle should have the same embedding degree k and same 2-adicity, like Pasta curves and unlike Tweedle curves.

The only known pairing-friendly cycles are formed by alternating MNT (Miyaji-Nakabayashi-Takano) curves of embedding degrees 4 and 6 using [KT07] method. Note, that due to low embedding degrees, secure curves in the MNT family must be constructed over very large (1024-bit) fields, which significantly downgrades the performances.

Methods for constructing elliptic curves

Complex multiplication method

Complex multiplication (CM) method is used to generate an equation for a curve with some given properties such as order n, embedding degree k, trace t, and fundamental discriminant D.

To construct an elliptic curve over Fq with n points:

- Start by choosing a prime power q and integers n,D,k,t.

- Find an integer solution (x,y) for the CM equation of form Dy2=4q−t2=4hr−(t−2)2.

To construct family of curves Fq(x):

- Parametrise t,r,q as polynomials: t(x),n(x),q(x).

- Find all solutions (x,y) to the CM equation in the polynomial form Dy2=4q(x)−t(x)2=4h(x)r(x)−(t(x)−2)2.

The output is coefficients A and B for the elliptic curve equation of the Weierstrass form (y2=x3+Ax+B).

Cocks-Pinch method

Cocks-Pinch (CP) method is used to construct pairing-friendly elliptic curves with arbitrarily chosen embedding degree; curves constructed using this method have ρ-value of approximately 2.

To use CP method:

- Choose a positive integer k and a integer r congruent to 1 modulo k, and fundamental discriminant D.

- Find trace t and prime q such that the CM equation is satisfied.

The output is a prime integer q such that there exist an elliptic curve E over Fq with an order-r subgroup and embedding degree k. If fundamental discriminant D≤1012 then E can be constructed using the CM method.

| Advantages | Disadvantages |

|---|

| order r can be chosen in advance | cannot construct curves of prime order |

| allows arbitrary embedding degree k | ρ≈2 (security cost is about twice the base field size) |

| many curves possible; easy to specify bit sizes | |

When to use CP method:

- For embedding known curve's base field into new curve's scalar field.

- When minimising ρ is not a priority.

The addition in double-and-add iteration (Miller's loop) of pairing operation will be executed more quickly when r has low Hamming weight (number of non-zero bits). Using CP method that allows for r to be chosen arbitrarily, this optimization can be exploited.

Dupont-Enge-Morain method

Dupont-Enge-Morain (DEM) method is similar to CP in that it produces elliptic curves with an arbitrary embedding degree, but in doing so it computes trace t and subgroup order r simultaneously using resultants.

To use DEM method:

- Choose embedding degree k and fundamental discriminant D.

- Find t and r simultaneously using resultants, then find cofactor h such that CM equation is satisfied.

The output is prime integers q and r such that there exist an elliptic curve E over Fq with an order-r subgroup and embedding degree k. If a=Dy2 with D≤1012 then E can be constructed using the CM method.

| Advantages | Disadvantages |

|---|

| effective for computing curves with arbitrary embedding degree k | more difficult to specify order r precisely as it found as a value of a certain polynomial |

When to use DEM method:

- When r is already defined by the polynomial in a higher-level application, otherwise use CP method instead.

- When t,r,q are parameterised as polynomials, then the ρ<2 can be achieved in resulting cyclotomic curve families (Fq is a cyclotomic field, r is a cyclotomic polynomial).

Miyaji-Nakabayashi-Takano method

Miyaji-Nakabayashi-Takano (MNT) method is used to sample a sparse family of elliptic curves with an arbitrary but limited embedding degree.

To use MNT method:

- Choose embedding degree k and fundamental discriminant D.

- Parametrise t(x), h(x) (set h(x)=1 if prime-order curve is needed).

- Compute r(x) and q(x) such that r(x)=q(x)+1−t(x) and q(x)=h(x)r(x)+t(x)−1.

- Find all solutions (x,y) such that CM equation is satisfied.

The output is a polynomial q(x) and r(x) such that there exist a set of elliptic curve E(x) over Fq(x) with h(x)⋅r(x) points and embedding degree k=3,4, or 6. If q(x),r(x) are both primes, then curves E(x) can be constructed via CM method.

| Advantages | Disadvantages |

|---|

| good for finding prime-order curves | embedding degree is limited* to k=3, 4, 6 |

| 128-bit security requires large (1024-bit) fields |

* extension methods allow k=10, 12

When to use:

- When a prime-order curve is needed.

- When looking for cycles of curves.

Twisted Edwards curves over the known field

There's no single method for finding curves with a given base field size q, but the general procedure is following:

- Fix the curve by choosing coefficient d.

- Try different a, until you find a curve over Fq with satisfiable subgroup size r.

- During the search it’s possible to constraint other parameters, e.g. cofactor.

An alternative well-generalized method is described "Twisted Edwards Elliptic Curves for Zero-Knowledge Circuits" and was used to find Baby-JubJub over BN256 scalar field. Authors first find a Montgomery curve of desired parameters and then transform it to a twisted Edwards curve, which is possible because both forms are birationally equivalent. See example code.

More about the Twist

Twist is a method of finding a twisted curve E′ over Fq which is isomorphic (equivalent) to a known curve E over Fqd such that it's possible to use the results of cheaper arithmetic over smaller Fq for computation on points of E that is defined over a larger field Fqd.

The minimal integer d for which E and E′ are isomorphic over Fqd is called the degree of the twist. There exist curves with: quadratic twist d=2, cubic twist d=3, and sextic twist d=6.

To find (quadratic) twist:

- Suppose you have a curve E over Fp with equation y2=x3+ax3+bx+c.

- Pick some d that is not a square mod p, i.e. there is no x such that x2−c is divisible by p.

- Define the twisted curve E′ with equation dy2=x3+ax3+bx+c.

To find higher-degree twists it's possible to stack multiple low-degree twists in a "tower" structure. For example, a sextic twist can be constructed by stacking two cubic twists: Fq3→Fq32→Fq6. Some operations such as pairing can be computed more quickly when performed over the extension field in tower form.

Advantages:

- Increases security of new curve while keeping the performance of origin curve, e.g. a twisted curve defined over Fqd may have a 214-bit security, but group operations could be computed in Fq instead of Fqd.

- Compression: in a twisted curve with embedding degree k and a degree-d twist the output of pairing can be given as an element of Fqk/d instead of Fqk, sparing log2d bits.

Methods for curve optimization

Ristretto method

Ristretto is a technique that can be applied to Edwards curves with cofactor h=4 or 8 to map their points of infinite order to points of prime order effectively creating prime order groups.

To use Ristretto:

- Define a new type for Ristretto points which contains the representative Edwards point.

- Add an equality function to compare both representations of the same point.

- Add encoding and decoding functions to represent a point in and from their corresponding bitstrings.

- Add a map from bitstrings to Ristretto points suitable for hash-to-point operations.

Advantages:

- Prevents certain attacks e.g. small subgroup attacks.

- Compressed points are more efficient to transmit and store.

- Preserves points' specific properties, which can be important for security.

- Can reduce cofactor h that can be exploited by attackers.

Gallant-Lambert-Vanstone method

Gallant-Lambert-Vanstone (GLV) method is a technique that can be applied to curves whose endomorphism ψ can be efficiently computed to significantly accelerate scalar multiplication.

To use GLV method:

- During curve generation fundamental discriminant must be restricted to −D≥−388 so that ψ can be efficiently computed.

- When implementing curve's arithmetic, scalar multiplication should be written according to GLV algorithm (example).

To start with elliptic curve development install SageMath - an open-source python-based math-oriented programming environment that offers a comprehensive suite of tools that are essential for generating and testing elliptic curves. Likely, there's no need to implement everything from scratch. The researchers from SCIPR Lab already developed and packaged many of the popular methods into the ecfactory plugin. Though the recommended installation method didn't work for me with SageMath 9.0+, so I opted to add pip installation support in my fork.

Security considerations are essential to elliptic curve development. To check that a given curve satisfies current security requirements use SafeCurves, which essentially is a set of criteria for evaluating the security of elliptic curve cryptography. The criteria include standards for the properties of the curve itself such as its order, shape, and rigidity to various attacks, as well as properties of the underlying field such as size, stability, randomness, etc. The describe-curve tool can further help with SafeCurves checks but at the time of writing, it's still under development.

Resources

Demo

Here is the SageMath script for finding a pairing-friendly elliptic curve whose scalar field embeds the base field of Curve25519 using the Cocks-Pinch method.

Summary

The advances in blockchains and zero knowledge cryptography created a demand for new application-specific elliptic curves. This became especially relevant for arithmetic circuit development and recursive proof composition.

To reduce prover overhead when the verifier operates over a specific curve, the application can be expressed over a twisted Edwards whose base field matches the verifier's curve.

When the application logic requires certain curve arithmetic, then the same optimization is possible by using Cocks-Pinch or DEM methods to find a compatible pairing curve whose scalar field embeds application's curve base field.

To perform efficient recursive proving, an elliptic curve cycle is needed, which can be obtained using MNT method. A slightly more performant approach relies on Cocks-Pinch curves that form a chain, but this way the recursion depth is limited.

For developing elliptic curves use SageMath with ecfactory. To evaluate the security of newly founded curves use SafeCurves and describe-curve tools.